Java Applications with MongoDB on AWS

Introduction

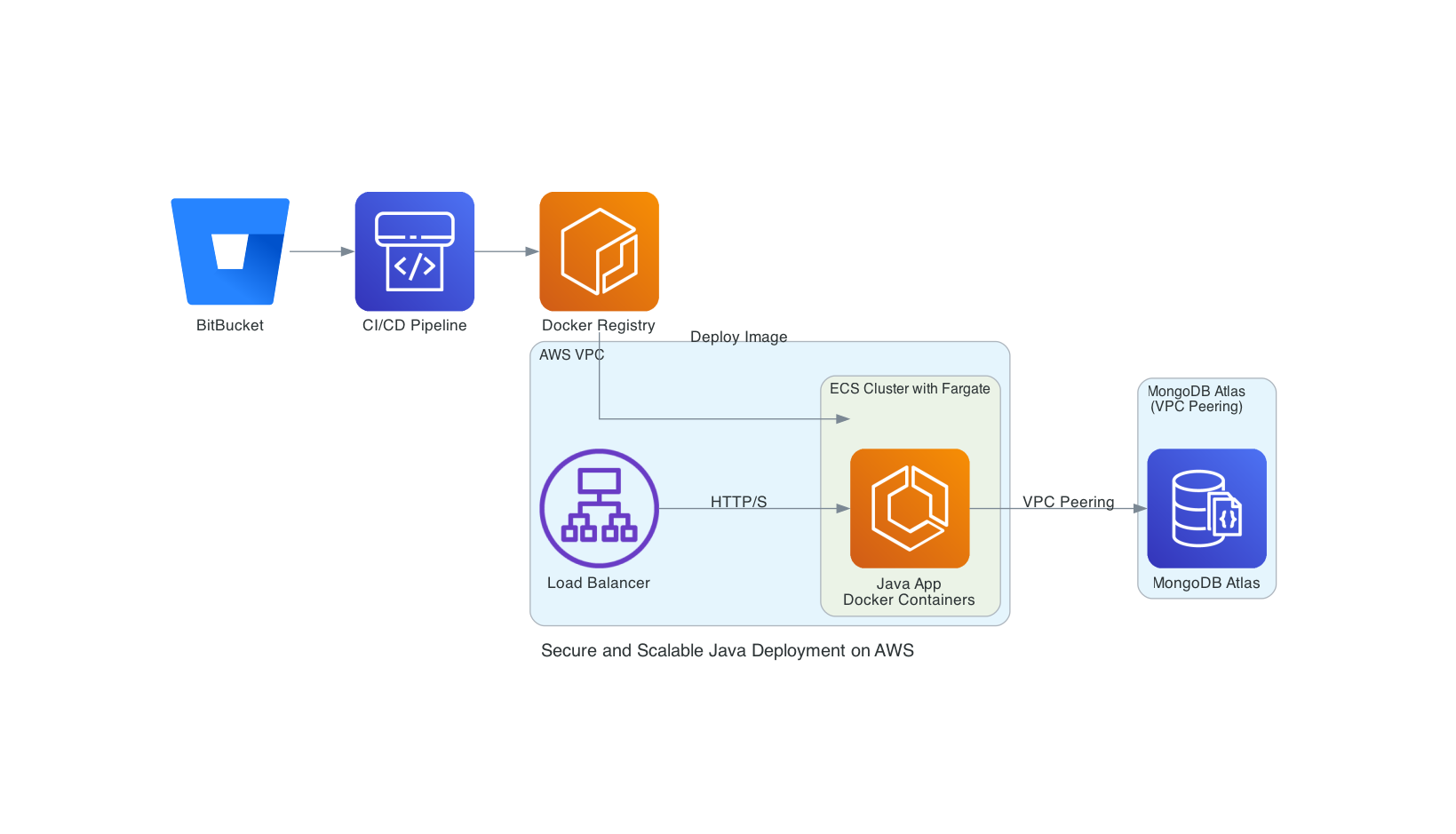

This article describes an architecture for deploying Java applications on AWS, designed to provide scalability, security, and automation. It uses Docker for packaging, AWS ECS with Fargate for orchestration, and MongoDB Atlas as the database. On this infrastructure, a blue-green deployment model minimizes downtime. The CI/CD pipeline, built with BitBucket and Maven, runs unit and integration tests so that every release is stable.

The diagram above summarizes the main flows described in the article.

Packaging Applications with Docker

The architecture relies on Docker to build images of the Java applications, which keeps them portable and consistent across environments. This removes problems caused by differing dependencies and configurations, so development, testing, and production stay aligned. The Docker images are built automatically in the BitBucket pipelines and stored in a private registry on AWS ECR, ready to be deployed to AWS ECS.
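To make the registry step concrete, here is a minimal Java sketch of how a pipeline step might compute the reference of the image to push. It assumes the standard host format of private ECR registries and tags each image with the short commit hash from BitBucket's `BITBUCKET_COMMIT` variable. The tagging convention, account ID, region, and repository name (`orders-service`) are hypothetical choices and are not part of the original setup.

```java
/**
 * Sketch: builds the reference of an image stored in a private Amazon ECR
 * registry. Account ID, region, and repository name are hypothetical placeholders.
 */
final class EcrImageRef {

    private EcrImageRef() {}

    // Private ECR registries use the host <account-id>.dkr.ecr.<region>.amazonaws.com
    static String imageUri(String accountId, String region, String repository, String tag) {
        if (accountId == null || !accountId.matches("\\d{12}")) {
            throw new IllegalArgumentException("AWS account IDs have 12 digits: " + accountId);
        }
        if (region == null || repository == null || tag == null) {
            throw new IllegalArgumentException("region, repository and tag are required");
        }
        return accountId + ".dkr.ecr." + region + ".amazonaws.com/" + repository + ":" + tag;
    }

    // Hypothetical convention: tag with the short commit hash so that each image
    // can be traced back to the commit that produced it.
    static String tagFromCommit(String commitSha) {
        if (commitSha == null || commitSha.length() < 7) {
            throw new IllegalArgumentException("commit SHA too short: " + commitSha);
        }
        return commitSha.substring(0, 7);
    }

    public static void main(String[] args) {
        // BITBUCKET_COMMIT is set by BitBucket Pipelines; the fallback is for local runs.
        String commit = System.getenv().getOrDefault("BITBUCKET_COMMIT", "0123456789abcdef");
        // Hypothetical account, region, and repository.
        System.out.println(imageUri("123456789012", "eu-south-1", "orders-service", tagFromCommit(commit)));
    }
}
```

Tagging with the commit hash instead of a moving tag such as `latest` makes it unambiguous which build a running task was started from.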

Build and Testing with Maven

The build process is automated with Maven, which handles both compiling the code and running the tests. Every push to the repository triggers a CI/CD pipeline in BitBucket, which performs the following steps:

- Compilation and dependency resolution: Maven resolves all the dependencies declared by the project, downloading any that are missing, and compiles the code, so the build environment is complete.

- Unit testing: a suite of automated unit tests runs with JUnit. Unit tests check the behavior of individual units of code in isolation, so each component of the application is verified to work as intended.

- Integration testing: once the unit tests pass, the pipeline runs the integration tests. These check that the application's components work correctly together, covering the interactions between services, the database, and other external dependencies.
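A minimal sketch can illustrate how the two kinds of test differ. The hypothetical `OrderService` depends on a hypothetical `OrderRepository` interface. A unit test replaces the MongoDB-backed implementation with an in-memory fake, so it checks only the service logic. An integration test would instead run the same service against a real database. In the project these checks would be JUnit test methods. Here they are plain assertions in `main`, so the example runs without dependencies.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

final class OrderServiceUnitTest {

    // Hypothetical persistence port; in production it would be backed by MongoDB.
    interface OrderRepository {
        Optional<Integer> findQuantity(String orderId);

        void saveQuantity(String orderId, int quantity);
    }

    // Hypothetical unit under test: business logic only, no direct database access.
    static final class OrderService {
        private final OrderRepository repository;

        OrderService(OrderRepository repository) {
            this.repository = repository;
        }

        int addItems(String orderId, int items) {
            if (items <= 0) {
                throw new IllegalArgumentException("items must be positive");
            }
            int updated = repository.findQuantity(orderId).orElse(0) + items;
            repository.saveQuantity(orderId, updated);
            return updated;
        }
    }

    // In-memory fake that isolates the service from the database.
    static final class InMemoryOrderRepository implements OrderRepository {
        final Map<String, Integer> data = new HashMap<>();

        @Override
        public Optional<Integer> findQuantity(String orderId) {
            return Optional.ofNullable(data.get(orderId));
        }

        @Override
        public void saveQuantity(String orderId, int quantity) {
            data.put(orderId, quantity);
        }
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        InMemoryOrderRepository repo = new InMemoryOrderRepository();
        OrderService service = new OrderService(repo);

        check(service.addItems("A-1", 2) == 2, "a new order starts from zero");
        check(service.addItems("A-1", 3) == 5, "quantities accumulate");
        check(repo.data.get("A-1") == 5, "the result is saved through the repository");

        boolean rejected = false;
        try {
            service.addItems("A-1", 0);
        } catch (IllegalArgumentException expected) {
            rejected = true;
        }
        check(rejected, "non-positive quantities are rejected");
        System.out.println("unit checks passed");
    }
}
```

Because the service depends on an interface rather than on the database client, the same class can be tested in isolation here and against MongoDB in the integration stage.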

This testing phase catches errors early, so that only a stable version moves on to the Docker image build.
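The ordering above acts as a gate: a failing stage stops the pipeline before the Docker image is built. Maven typically provides this ordering through its build lifecycle. In that lifecycle, the `test` phase (Surefire plugin, unit tests) runs before the `integration-test` and `verify` phases (Failsafe plugin, integration tests), assuming the project is configured with those plugins. The Java sketch below only simulates the fail-fast behavior, with hypothetical stage names and outcomes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

final class PipelineGate {

    // A named pipeline step; the supplier returns true when the step succeeds.
    record Stage(String name, BooleanSupplier action) {}

    record Result(boolean success, List<String> executed) {}

    // Runs stages in order and stops at the first failure, so later stages
    // (such as the Docker build) never run after a failed test stage.
    static Result run(List<Stage> stages) {
        List<String> executed = new ArrayList<>();
        for (Stage stage : stages) {
            executed.add(stage.name());
            if (!stage.action().getAsBoolean()) {
                return new Result(false, List.copyOf(executed));
            }
        }
        return new Result(true, List.copyOf(executed));
    }

    public static void main(String[] args) {
        // Hypothetical outcomes: the integration tests fail.
        Result result = run(List.of(
                new Stage("compile", () -> true),
                new Stage("unit-tests", () -> true),
                new Stage("integration-tests", () -> false),
                new Stage("docker-build", () -> true)));
        // Prints: Result[success=false, executed=[compile, unit-tests, integration-tests]]
        System.out.println(result);
    }
}
```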

Container Orchestration with AWS ECS and Fargate

Containers are managed and orchestrated with AWS Elastic Container Service (ECS) using the Fargate launch type. Fargate removes the need to provision and manage servers and their operating systems. You specify only the resources each container needs, and AWS takes care of the security of the underlying infrastructure and of resource allocation.
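On Fargate, a task's CPU and memory cannot be chosen freely, because only specific combinations are accepted. The sketch below validates a (CPU units, memory in MiB) pair against the Linux combinations up to 4 vCPU, as documented by AWS at the time of writing (1024 CPU units = 1 vCPU). Larger sizes exist and the table can change, so the ECS documentation remains the authority.

```java
final class FargateTaskSize {

    // True if (cpuUnits, memoryMiB) is a Fargate Linux task size up to 4 vCPU,
    // per the AWS documentation at the time of writing (1024 CPU units = 1 vCPU).
    static boolean isValid(int cpuUnits, int memoryMiB) {
        return switch (cpuUnits) {
            case 256 -> memoryMiB == 512 || memoryMiB == 1024 || memoryMiB == 2048;
            case 512 -> inGbSteps(memoryMiB, 1, 4);
            case 1024 -> inGbSteps(memoryMiB, 2, 8);
            case 2048 -> inGbSteps(memoryMiB, 4, 16);
            case 4096 -> inGbSteps(memoryMiB, 8, 30);
            default -> false;
        };
    }

    // memoryMiB must be a whole number of GB (1024 MiB) between minGb and maxGb, inclusive.
    private static boolean inGbSteps(int memoryMiB, int minGb, int maxGb) {
        return memoryMiB % 1024 == 0
                && memoryMiB >= minGb * 1024
                && memoryMiB <= maxGb * 1024;
    }

    public static void main(String[] args) {
        System.out.println(isValid(512, 1024));  // true
        System.out.println(isValid(256, 4096));  // false: 0.25 vCPU allows at most 2 GB
        System.out.println(isValid(1024, 3072)); // true
    }
}
```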

In practice, each service running on ECS is defined by a task definition that specifies the allocated resources (CPU, memory) and the network configuration. With this setup, the number of running tasks can scale automatically in response to traffic through ECS Service Auto Scaling, which keeps resource usage efficient.
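Automatic scaling is commonly configured as a target-tracking policy, for example one that keeps average CPU utilization near a target value. The following Java code is a simplified model of that idea, not AWS's actual algorithm, which also applies cooldowns and scales in more conservatively. In the model, the desired task count is proportional to the ratio between the observed metric and the target, clamped to the service's minimum and maximum task counts.

```java
final class TargetTrackingSketch {

    // Simplified target tracking: pick the task count that would bring the
    // average metric (e.g. CPU %) back to the target, within [minTasks, maxTasks].
    static int desiredTasks(int currentTasks, double observedMetric, double targetMetric,
                            int minTasks, int maxTasks) {
        if (currentTasks <= 0 || targetMetric <= 0 || minTasks > maxTasks) {
            throw new IllegalArgumentException("invalid scaling parameters");
        }
        int proposed = (int) Math.ceil(currentTasks * observedMetric / targetMetric);
        return Math.max(minTasks, Math.min(maxTasks, proposed));
    }

    public static void main(String[] args) {
        // 4 tasks at 90% CPU with a 60% target: ceil(4 * 90 / 60) = 6
        System.out.println(desiredTasks(4, 90.0, 60.0, 2, 10));
        // 4 tasks at 15% CPU: ceil(4 * 15 / 60) = 1, raised to the minimum of 2
        System.out.println(desiredTasks(4, 15.0, 60.0, 2, 10));
    }
}
```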

Network Security with VPC and VPC Peering

The entire ECS cluster is isolated inside a Virtual Private Cloud (VPC) and communicates with the MongoDB Atlas VPC through VPC peering. As a result, the database is never exposed to the Internet, which protects sensitive resources. Traffic between the ECS containers and MongoDB stays entirely within this private network. The only ports reachable from the Internet are 80 (HTTP) and 443 (HTTPS). These are exposed by an Application Load Balancer (ALB), which distributes traffic to ECS. This means that only traffic passing through the load balancer can reach the containers.
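The network rules described above can be modeled as a small allow-list, in which each layer accepts traffic only from a given source range on a given port. In AWS these rules would live in security groups, which can also reference each other instead of CIDR blocks, and in the Atlas access list. The Java sketch below only reproduces the matching logic. All CIDR blocks and the container port 8080 are hypothetical.

```java
import java.util.List;

final class NetworkPolicySketch {

    // An inbound rule: traffic from sourceCidr to the given port is allowed.
    record Rule(String sourceCidr, int port) {}

    // Hypothetical rules for each layer.
    static final List<Rule> ALB = List.of(new Rule("0.0.0.0/0", 80), new Rule("0.0.0.0/0", 443));
    static final List<Rule> ECS_TASKS = List.of(new Rule("10.0.1.0/24", 8080)); // ALB subnet only
    static final List<Rule> MONGODB = List.of(new Rule("10.0.0.0/16", 27017));  // peered ECS VPC only

    // Parses a dotted-quad IPv4 address into its 32-bit value.
    static int toInt(String ip) {
        String[] parts = ip.split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + ip);
        }
        int value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("invalid octet in " + ip);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    // True if ip falls inside the CIDR block, e.g. "10.0.0.0/16".
    static boolean inCidr(String ip, String cidr) {
        String[] pieces = cidr.split("/");
        int prefix = Integer.parseInt(pieces[1]);
        if (prefix < 0 || prefix > 32) {
            throw new IllegalArgumentException("invalid prefix in " + cidr);
        }
        // Java masks shift distances to 0..31, so /0 needs its own case.
        int mask = prefix == 0 ? 0 : -1 << (32 - prefix);
        return (toInt(ip) & mask) == (toInt(pieces[0]) & mask);
    }

    static boolean allowed(List<Rule> rules, String sourceIp, int port) {
        return rules.stream().anyMatch(r -> r.port() == port && inCidr(sourceIp, r.sourceCidr()));
    }

    public static void main(String[] args) {
        System.out.println(allowed(ALB, "203.0.113.7", 443));        // true: public HTTPS
        System.out.println(allowed(ECS_TASKS, "203.0.113.7", 8080)); // false: must go through the ALB
        System.out.println(allowed(MONGODB, "203.0.113.7", 27017));  // false: database not public
        System.out.println(allowed(MONGODB, "10.0.12.34", 27017));   // true: from the peered VPC
    }
}
```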

Managed Database with MongoDB Atlas

The database runs on MongoDB Atlas, a managed platform that provides scalable, simplified infrastructure for MongoDB databases. Atlas includes built-in backup, restore, and monitoring, which improves reliability and makes scaling easier. Thanks to VPC peering, traffic between ECS and MongoDB stays entirely within the private network, which secures the connection without complex configuration.
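Even over the private network, the application still needs a connection string and credentials. The sketch below assembles a `mongodb+srv://` URI and percent-encodes the username and password, as the MongoDB connection string format requires for reserved characters such as `@`, `:`, and `/`. The environment variable names (`MONGO_USER`, `MONGO_PASSWORD`), the host, and the database name are hypothetical. With the official Java sync driver, the resulting string would be passed to `MongoClients.create(uri)`.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

final class AtlasConnectionString {

    // Percent-encodes a username or password for use in a MongoDB URI.
    // URLEncoder applies form encoding (space -> '+'), so '+' is turned into %20;
    // a literal '+' in the input has already become %2B at this point.
    static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8).replace("+", "%20");
    }

    static String srvUri(String user, String password, String host, String database) {
        return "mongodb+srv://" + encode(user) + ":" + encode(password) + "@" + host
                + "/" + database + "?retryWrites=true&w=majority";
    }

    public static void main(String[] args) {
        // Hypothetical variable names and defaults; credentials are read from the
        // environment instead of being hard-coded in the image.
        String user = System.getenv().getOrDefault("MONGO_USER", "app");
        String password = System.getenv().getOrDefault("MONGO_PASSWORD", "p@ss:w/rd");
        String host = "cluster0.example.mongodb.net"; // hypothetical Atlas host

        String uri = srvUri(user, password, host, "orders");
        // uri would be passed to the driver; only a masked copy is printed here.
        System.out.println(uri.replace(encode(password), "****"));
    }
}
```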

Continuous Integration and Continuous Deployment with BitBucket Pipelines

BitBucket hosts the source code and runs the CI/CD process through an automated pipeline. Every push to the repository starts a BitBucket pipeline that covers the entire release lifecycle:

- Build and testing: as described above, Maven compiles the code and runs the unit and integration tests. If all tests pass, a Docker image of the application is built.

- Docker image publishing: the Docker image is then pushed to a private Docker registry (AWS ECR), ready for deployment.

- Blue-green deployment on ECS: finally, the image is deployed to ECS with a blue-green approach. In this model, the new version of the application is started in a parallel environment (green), while the current version stays live in production (blue). As soon as the new version starts responding to health-check (ping) requests, traffic is progressively shifted to it. If the new version has problems, the release can be rolled back quickly by sending traffic back to the blue environment.
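The progressive traffic shift can be sketched as a loop. It moves traffic to the green version in fixed steps, checks green's health before each step, and sends everything back to blue at the first failure. In ECS, the deployment tooling would carry out the shift, for instance by adjusting the weights of two ALB target groups. The Java code below only simulates the decision logic. The step size and the health-check predicate are hypothetical inputs.

```java
import java.util.function.IntPredicate;

final class BlueGreenShift {

    /**
     * Shifts traffic from blue to green in steps of stepPercent.
     * Before each step, greenHealthy is asked whether green is healthy enough
     * to receive the given percentage of traffic. Returns green's final share:
     * 100 on success, 0 after a rollback to blue.
     */
    static int shift(int stepPercent, IntPredicate greenHealthy) {
        if (stepPercent < 1 || stepPercent > 100) {
            throw new IllegalArgumentException("step must be between 1 and 100");
        }
        int green = 0;
        while (green < 100) {
            int next = Math.min(100, green + stepPercent);
            if (!greenHealthy.test(next)) {
                System.out.println("green failed its health check at " + next + "%, rolling back to blue");
                return 0; // all traffic back to blue
            }
            green = next;
            System.out.println("blue " + (100 - green) + "% / green " + green + "%");
        }
        return green;
    }

    public static void main(String[] args) {
        // Healthy throughout: 25 -> 50 -> 75 -> 100
        System.out.println(shift(25, pct -> true));
        // Health check fails when green would take 75% of the traffic: rollback
        System.out.println(shift(25, pct -> pct <= 50));
    }
}
```

Because blue keeps running until the shift completes, the rollback here is just a routing change and needs no redeployment.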

Benefits of Blue-Green Deployment

Blue-green deployment reduces downtime and simplifies rollback when errors occur. Because two parallel environments are always available, production updates can be performed without interruption and new versions can be tested safely. When the new version is ready, traffic is simply redirected to the green environment, and it can be switched back to blue quickly if necessary.

Conclusions

The architecture described here is a secure, scalable solution for deploying containerized Java applications on AWS, combining Docker, AWS ECS with Fargate, MongoDB Atlas, and BitBucket Pipelines. This setup provides:

- A structured build and testing process with Maven and JUnit.

- Automated, secure, and scalable deployment.

- An isolated, protected infrastructure thanks to VPC networking and VPC peering.

- Continuous updates with fast rollback through the blue-green deployment model.

This configuration keeps the infrastructure agile and secure while efficiently meeting the deployment needs of distributed applications in the cloud.

References

- Docker - <https://www.docker.com>

- AWS Elastic Container Service (ECS) - <https://aws.amazon.com/ecs/>

- AWS Fargate - <https://aws.amazon.com/fargate/>

- AWS Elastic Container Registry (ECR) - <https://aws.amazon.com/ecr/>

- MongoDB Atlas - <https://www.mongodb.com/atlas>

- BitBucket - <https://bitbucket.org>

- Maven - <https://maven.apache.org>